Episode Summary

In Episode 3, we tackle a classic data science pain point: the “last mile” problem. Nick Zervoudis and host Lior Barak encounter a real challenge for the first time during recording: a high-performing ML model stuck in development limbo while a sales team desperately needs its predictions to save customer relationships.

Problem Category: Machine Learning & AI Implementation

Runtime: 45 minutes

Recording Date: [Date]

The Problem

Submitted by: Maya (No family name or company 😞)

Industry Context: SaaS/Enterprise software with renewal-based revenue model

Problem Framework

Issue: High-accuracy churn prediction ML model (87% accuracy, 60-day warning) exists but remains trapped in Jupyter notebooks, making it impossible for the sales team to act on predictions and prevent customer churn

Trigger: Lost a major client that the model had flagged as high-risk six weeks earlier. The sales team never received the alert because nothing systematically delivered predictions from the notebooks to Salesforce

Tension:

Renewal rate targets aren’t improving despite significant AI investment

Sales reps feel they’re “flying blind” without the promised insights

CEO pressure on the data science team about ROI and business impact

Team credibility is at stake

Boundaries:

The model itself works well (87% accuracy validated)

Data science team is capable - this isn’t about talent or training

Salesforce instance cannot be replaced

The sales team must stay in their primary Salesforce workflow

A budget exists for reasonable deployment solutions

Tech Stack: Python ML model in Jupyter Notebooks, Salesforce CRM, AWS infrastructure

Clarity Statement: “How can we enable our sales team to identify which customers are at risk of churning within their existing workflow?”

Our Guest

Nick Zervoudis

Data Product Management Consultant & Coach | Value from Data & AI

Nick helps data teams escape the reactive “service desk” trap and transition to value-creating data product organizations. After spending years bridging tech and business in data roles - from startups to consulting to corporate - he recently quit his Head of Product role at CKDelta (where he doubled annual revenue and achieved 5x ARR) to start Value from Data & AI.

Now he focuses on data product management training, consulting, and coaching, helping teams prove and maximize their ROI. Nick also co-hosts the “Data Product Management in Action” podcast, organizes Data & AI PM meetups in London and Barcelona, and was recently featured in Driven by Data Magazine with his article “From Order-Takers to Value Creators.”

Background:

Former Head of Product (Data & AI) at CKDelta - achieved 2x annual revenue and 5x ARR

Ex-PepsiCo Data Product Manager - built Store DNA platform that unlocked tens of millions in opportunities

Strategic Advisor at Mindfuel

MSc Management (Digital Business) from Imperial Business School

Based in Barcelona, Spain

Connect with Nick:

Blog:

Course - ROI of Data & AI: https://maven.com/nick-zervoudis/dpm-value-course

LinkedIn: https://www.linkedin.com/in/nzervoudis/

YouTube channel:

Co-host: Data Product Management in Action podcast

The Solution

Phased 90-Day Deployment Strategy

Phase 1: Days 1-30 - Validate & Test

Generate model predictions daily (CSV export from notebooks)

Implement human validation by domain experts (sales managers)

Begin acting on results to validate both the model AND the playbook

Critical Go/No-Go decision at Day 30: Is this actually helping?
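To make Phase 1 concrete, here is a minimal sketch of the daily CSV export with blank columns for the sales managers' validation. It was not shown in the episode: the column names, the 0.7 threshold, and the `export_predictions` helper are all assumptions for illustration.

```python
import csv
import io
from datetime import date

# Hypothetical column names; the episode does not specify an export schema.
FIELDS = ["run_date", "account_id", "churn_probability", "manager_agrees", "action_taken"]


def export_predictions(scores, threshold, out, run_date=None):
    """Write accounts at or above `threshold` to CSV, highest risk first.

    `scores` maps account_id -> churn probability (0..1).
    The last two columns are left blank for sales managers to fill in.
    Returns the number of rows written.
    """
    run_date = run_date or date.today()
    flagged = sorted(
        ((acct, p) for acct, p in scores.items() if p >= threshold),
        key=lambda item: item[1],
        reverse=True,
    )
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    for acct, p in flagged:
        writer.writerow({
            "run_date": run_date.isoformat(),
            "account_id": acct,
            "churn_probability": f"{p:.2f}",
            "manager_agrees": "",
            "action_taken": "",
        })
    return len(flagged)


if __name__ == "__main__":
    demo = {"acme": 0.91, "globex": 0.42, "initech": 0.77}  # made-up scores
    buf = io.StringIO()
    export_predictions(demo, threshold=0.7, out=buf)
    print(buf.getvalue())
```

The filled-in `manager_agrees` and `action_taken` columns are what would feed the Day 30 Go/No-Go conversation: they record whether the experts trusted each flag and what the team did about it.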

Phase 2: Days 31-60 - Refine & Scale

Incorporate feedback and learnings into the model

Continue acting on evolved predictions

Implement AWS automation for daily runs

Embed dashboards with results

Set up Slack/email alerts as an intermediate delivery mechanism

Cost and revenue validation checkpoint
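For the intermediate Slack/email step in Phase 2, one possible shape is an alert that only mentions accounts that newly crossed the risk threshold, so reps are not pinged about the same customer every day. This is a sketch under assumptions: `build_alert`, the threshold, and the message wording are hypothetical, and the `{"text": ...}` body is the simple JSON form that Slack incoming webhooks accept. Actually posting the message is left out.

```python
import json


def build_alert(today, yesterday, threshold=0.7):
    """Return a JSON alert body for accounts that crossed `threshold` today.

    `today` and `yesterday` map account_id -> churn probability.
    Accounts already at or above the threshold yesterday are skipped.
    Returns None when there is nothing new to report.
    """
    newly_flagged = sorted(
        acct for acct, p in today.items()
        if p >= threshold and yesterday.get(acct, 0.0) < threshold
    )
    if not newly_flagged:
        return None
    lines = [f"- {acct}: {today[acct]:.0%} churn risk" for acct in newly_flagged]
    text = "New high-risk accounts:\n" + "\n".join(lines)
    return json.dumps({"text": text})


if __name__ == "__main__":
    # Made-up scores for two consecutive daily runs.
    print(build_alert({"acme": 0.91, "initech": 0.82}, {"acme": 0.88, "initech": 0.40}))
```

The same text could go into an email body; the point is to reuse the daily run from the AWS automation rather than build a second pipeline.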

Phase 3: Days 61-90 - Production Integration

Build Salesforce API integration

Create custom views for the sales team workflow

Implement feedback loops

Final ROI assessment (clear, measurable success criteria)
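Before any Salesforce API call happens in Phase 3, the model output has to be translated into records Salesforce can accept. The sketch below covers only that preparation step and makes no API calls. The custom field name `Churn_Risk_Score__c`, the ID mapping, and both helper functions are hypothetical; the right batch size depends on the API you end up using, so treat the 200 in the example as an assumption to verify with your Salesforce admins.

```python
def to_salesforce_records(scores, id_map, field="Churn_Risk_Score__c"):
    """Translate model output into per-account update records.

    `scores` maps internal account_id -> churn probability (0..1).
    `id_map` maps internal account_id -> Salesforce record Id.
    Accounts with no known Salesforce Id are returned separately so
    they can be reviewed rather than silently dropped.
    """
    records, unmatched = [], []
    for acct, p in scores.items():
        sf_id = id_map.get(acct)
        if sf_id is None:
            unmatched.append(acct)
        else:
            # Store the score as a whole-number percentage.
            records.append({"Id": sf_id, field: round(p * 100)})
    return records, unmatched


def batches(items, size):
    """Yield consecutive slices of `items` with at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


if __name__ == "__main__":
    # Made-up scores and a made-up Salesforce Id.
    recs, missing = to_salesforce_records(
        {"acme": 0.91, "globex": 0.42}, {"acme": "001A"}
    )
    for chunk in batches(recs, 200):
        print(chunk)
    print("No Salesforce Id for:", missing)
```

Surfacing the unmatched accounts matters here: an account the model flags but Salesforce cannot find is exactly the kind of silent gap that caused the original missed alert.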

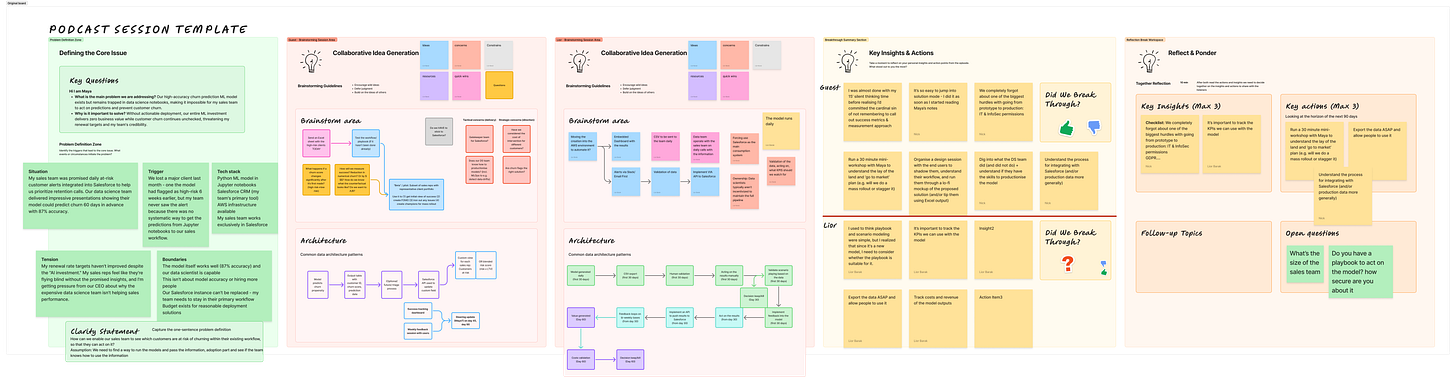

Visual Diagram

Key Takeaways

3 Critical Insights

The Checklist Blind Spot: Even experienced practitioners forget crucial stakeholders. Both Nick and Lior initially missed legal/GDPR compliance, IT security approvals, and Salesforce integration gatekeepers. Always run a standard checklist: IT access, privacy/legal compliance, data permissions, integration processes, security reviews.

Success Metrics Must Be Defined Upfront: An 87% accurate model means nothing without clarity on business impact. What does success look like? Preventing one major client loss? Hitting specific renewal targets? Reducing churn by X%? The metrics conversation needs to happen before deployment, not after.

Perfect is the Enemy of Good (and Fast): Waiting to build the perfect Salesforce integration while customers churn is malpractice. Export the CSV today. Send it manually. Let the sales team start learning. Quick wins build momentum and validate assumptions before investing in full automation.
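To illustrate the success-metrics insight above, here is a small worked example of why a headline accuracy figure says little on its own. Every number in it is invented for illustration (the counts, the save rate, the contract value, the outreach cost), and `business_impact` is a hypothetical helper, not something from the episode.

```python
def business_impact(tp, fp, fn, tn, save_rate, contract_value, outreach_cost):
    """Summarize a churn model in model terms and in money terms.

    tp/fp/fn/tn are confusion-matrix counts for "will churn" predictions.
    `save_rate` is the share of correctly flagged accounts that outreach retains.
    """
    total = tp + fp + fn + tn
    flagged = tp + fp
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / flagged,      # share of flags that were real churners
        "recall": tp / (tp + fn),       # share of real churners that were flagged
        "net_value": tp * save_rate * contract_value - flagged * outreach_cost,
    }


if __name__ == "__main__":
    # Invented example: 1,000 accounts, 90 of which actually churn.
    result = business_impact(
        tp=30, fp=70, fn=60, tn=840,
        save_rate=0.25, contract_value=20_000, outreach_cost=200,
    )
    for name, value in result.items():
        print(f"{name}: {value:,.2f}")
```

In this invented scenario the model is 87% accurate yet catches only a third of the churners, which is why the conversation about what success means should be settled in business terms before deployment.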

4 Action Items

For Maya (and others facing similar ML deployment challenges):

Week 1: Run 30-Minute Mini-Workshop with Maya - Clarify success metrics, understand sales team workflow, validate assumptions about playbook effectiveness, and ensure alignment on what “breakthrough” means for this project

Week 1: Export Data ASAP - Don’t wait for perfect infrastructure. Generate CSV predictions and get them to sales team leaders immediately. Start the learning process today, not in 60 days.

Week 2: Understand Salesforce Integration Process - Meet with IT, Salesforce admins, and security teams to map the approval process, timeline, and requirements. This could be a 2-week or 6-month journey - find out early.

Week 2-4: Meet with End Users - Shadow sales reps, understand their actual workflow (not what Maya thinks it is), run mockups by them, and identify 2-3 champion users for initial pilot testing

Episode Highlights

03:00 - The “food truck vs. grandma’s dish” philosophy of data products

07:35 - Problem reveal and the shocking client loss trigger

14:13 - Crafting the clarity statement and debating scope

18:13 - The 15-minute solo brainstorm begins

20:48 - Nick’s “oh s%#%” moment: forgetting success metrics despite emphasizing their importance

25:29 - Lior’s phased architecture with built-in kill switches

30:27 - The GDPR revelation: “We completely forgot about legal!”

40:01 - Final verdict: Breakthrough achieved (with caveats)

What I Learned from Nick

As the host, I want to share three key insights from working through this problem with Nick that completely shifted my perspective:

1. The Art of Intentional Problem Understanding

Nick demonstrated something powerful: the discipline to pause before solving. He articulated how our brains naturally race toward solutions, but the real skill is channeling that energy into a deeper exploration of the problem first. This conscious approach transforms how you engage with challenges - instead of fighting your instinct to solve, you redirect it toward understanding.

2. Quick Wins Are Strategic Intelligence, Not Shortcuts

Nick brilliantly reframed what I initially thought was a temporary workaround. Sending that CSV isn’t settling for less - it’s strategic intelligence gathering. You’re validating assumptions, proving value, and learning how users actually work before investing heavily in infrastructure. This isn’t just pragmatic; it’s smart product thinking. The “quick win” becomes your reconnaissance mission.

3. The Strategic Checklist Mindset

Here’s what I loved about Nick’s approach to checklists: use them as guardrails for the essentials (legal, IT, security), but treat them as a foundation, not a ceiling. The checklist catches what you might miss in the complexity of the moment - it’s your safety net that frees you up to think more creatively about the unique aspects of each problem. It’s liberating, not limiting.

Bonus Insight: One of the most valuable moments was watching Nick, a data product management expert, catch himself forgetting about success metrics mid-brainstorm. Far from being a weakness, the slip showed the power of systems and frameworks. Even masters need structure to maintain focus on what matters. It’s a reminder that expertise isn’t about being perfect; it’s about having the right tools to catch and correct yourself.

Resources Mentioned

Figma: Collaborative whiteboarding tool used during the brainstorming session

Value from Data and AI: Nick’s blog on data product management -

Maven Course: “ROI of Data & AI: How to demonstrate & maximise your impact” - https://maven.com/nick-zervoudis/dpm-value-course

Data Product Management in Action: Podcast co-hosted by Nick:

Continue the Conversation

Submit Your Data Problem

Have a challenge you’d like us to tackle? Use our structured framework to submit it

Become a Guest

Data practitioner interested in collaborative problem-solving? Apply here

Share Your Alternative Solution

Did this episode spark a different approach? Share it with the community:

Use #DataBreakthrough on social media

Reply to this newsletter

What Would YOU Do?

We’d love to hear from listeners who have:

Successfully deployed ML models to production

Navigated Salesforce integrations

Built churn prediction systems

Solved the “last mile” problem

Share your experiences and alternative approaches!

About Data Breakthroughs

Data Breakthroughs brings together data practitioners to solve real operational challenges through collaborative problem-solving. Each episode features authentic, unscripted brainstorming sessions where the host and guest encounter problems for the first time during recording, creating practical, implementable solutions.

Host: Lior Barak

Credits

Host & Producer: Lior Barak

Guest: Nick Zervoudis

Music: “Calisson” courtesy of Riverside

Visual Content: Figma collaboration board

Accessibility

Episode Transcript: Full transcript available above

Visual Diagrams: Figma board link provided; all visual content described verbally during episode

Disclaimer

This podcast is for inspiration and educational purposes. The solutions and approaches discussed are general frameworks meant to spark ideas and collaboration. Always adapt recommendations to your specific organizational context, constraints, and requirements. The goal is to have fun while exploring data challenges together!

Have feedback on this format or suggestions for future episodes? Reply to this newsletter or reach out on social media using #DataBreakthrough

Quick Listener Survey

Help us improve! Three quick questions:

What was your biggest takeaway from this episode?

Would you have approached Maya’s problem differently?

What data challenges would you like us to tackle next?

Reply with your answers or share on LinkedIn with #DataBreakthrough
